Jan 6, 2026

Google vs. SERP APIs: Why Linkup Users Stay Safe

Why Linkup remains a safe and reliable alternative after Google's lawsuit against SerpApi

Boris

COO at Linkup

In December 2025, Google filed a lawsuit against SerpApi in U.S. federal court, alleging violations of the Computer Fraud and Abuse Act (CFAA) and breach of contract. Google is no longer relying only on technical countermeasures against SERP scraping; it is now enforcing its position through litigation.

For teams relying on SERP APIs, this lawsuit changes the nature of the risk. What was previously treated as a technical inconvenience is now a legal and compliance problem.

What are SERP services?

SERP (Search Engine Results Page) services provide programmatic access to search engine results by automatically querying Google and extracting data from the results pages. In practice, they scrape rankings, links, and snippets from search engines like Google.

What Google is alleging

According to Google’s public filing, SerpApi:

Accessed Google systems without authorization

Violated Google’s Terms of Service through automated scraping

Circumvented protections designed to prevent scraping

Generated more than 11 billion automated queries

Google’s position is explicit: SERP scraping is unauthorized access, not a tolerated use case.

Why this lawsuit changes the risk model

Before this lawsuit, SERP scraping existed in a gray zone. Teams accepted the trade-offs: CAPTCHAs, proxy costs, unstable parsing, occasional downtime. Legal exposure was abstract and rarely discussed.

That is no longer the case. If Google prevails, SERP scraping becomes clearly associated with CFAA liability. This has second-order consequences that matter more than the lawsuit itself.

Enterprise compliance teams increasingly review whether vendors violate third-party Terms of Service. SOC 2 and ISO 27001 audits now include these questions. Cyber insurance policies often exclude coverage for “knowing violations” of access restrictions. A public lawsuit removes plausible deniability.

The core problem: SERPs are built for humans, not AI

SERP APIs fundamentally extract data from interfaces designed for human browsing. Search engines are optimized for clickable links, ads, rankings, and SEO-driven content.

AI systems do not need links. They need context.

Using SERPs as an input layer for AI systems is a mismatch by design. You are forcing AI to consume outputs meant for humans, then reconstruct meaning from pages that were never designed for machine consumption.

This is why SERP scraping is brittle technically, and why it is increasingly untenable legally.

Our position: AI-native search requires a different architecture

Linkup is not a SERP API. We do not scrape search engines.

We operate as a web search API. We crawl the open web directly, respect web standards, and build an index designed for retrieval-augmented generation.

The process is straightforward by design. We crawl the web, identify relevant content, split it into meaningful chunks, vectorize those chunks, and make them retrievable using vector distance. This allows AI systems to retrieve the right context directly, without relying on rankings, ads, or SEO artifacts.
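To make the chunk, vectorize, and retrieve steps concrete, here is a minimal Python sketch. It is not Linkup's implementation: the word-count chunker, the `embed` function (a hashed bag-of-words stand-in for a real embedding model), `DIM`, and the `ToyIndex` class are all hypothetical, chosen only so the example runs with the standard library.

```python
import math
import re
import zlib
from collections import Counter

DIM = 1024  # hypothetical vector size for the toy embedding


def chunk_text(text: str, max_words: int = 40) -> list[str]:
    """Split a document into consecutive chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def embed(text: str) -> list[float]:
    """Toy stand-in for an embedding model: hashed bag of words, L2-normalized."""
    vec = [0.0] * DIM
    for token, count in Counter(re.findall(r"[a-z0-9]+", text.lower())).items():
        # crc32 is deterministic across runs, unlike Python's built-in hash()
        vec[zlib.crc32(token.encode("utf-8")) % DIM] += count
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


def cosine_distance(a: list[float], b: list[float]) -> float:
    """Both vectors are unit length, so cosine distance is 1 minus the dot product."""
    return 1.0 - sum(x * y for x, y in zip(a, b))


class ToyIndex:
    """In-memory index: stores each chunk next to its vector, searches by linear scan."""

    def __init__(self) -> None:
        self.chunks: list[str] = []
        self.vectors: list[list[float]] = []

    def add_document(self, text: str, max_words: int = 40) -> None:
        # Split the document, then store every chunk alongside its vector
        for chunk in chunk_text(text, max_words):
            self.chunks.append(chunk)
            self.vectors.append(embed(chunk))

    def search(self, query: str, k: int = 3) -> list[str]:
        # Rank all chunks by vector distance to the query, closest first
        q = embed(query)
        order = sorted(range(len(self.chunks)),
                       key=lambda i: cosine_distance(q, self.vectors[i]))
        return [self.chunks[i] for i in order[:k]]


index = ToyIndex()
index.add_document("The robots.txt file tells crawlers which paths they may fetch.")
index.add_document("Vector search ranks chunks by embedding distance.")
print(index.search("which paths may crawlers fetch", k=1))
```

A production system would typically replace `embed` with a learned embedding model and the linear scan with an approximate nearest-neighbor index; the overall shape of the pipeline stays the same.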

Because of this approach, we intentionally exclude large classes of content that exist purely for human browsing: ad-heavy pages, SEO-first articles, and ranking-optimized filler.

Compliance and sustainability are architectural choices

Linkup respects robots.txt across all crawled domains. We do not crawl sites that prohibit it. Our technology is not designed to bypass protections, rate limits, or access controls.
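Honoring robots.txt comes down to a check a crawler runs before each fetch. As an illustration only (not Linkup's crawler code), Python's standard-library `urllib.robotparser` can parse a robots.txt body and answer whether a given user agent may fetch a URL. The robots.txt content, the `ExampleCrawler` and `ExampleBot` user agents, and the `filter_crawlable` helper below are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for example.com
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/

User-agent: ExampleBot
Disallow: /
"""


def filter_crawlable(robots_txt: str, user_agent: str, urls: list[str]) -> list[str]:
    """Return only the URLs this user agent is allowed to fetch.

    Assumes every URL belongs to the host that served robots_txt,
    since robots.txt rules apply per host.
    """
    parser = RobotFileParser()
    # parse() takes the file's lines directly, so no network request is made here
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if parser.can_fetch(user_agent, url)]


urls = [
    "https://example.com/blog/post",
    "https://example.com/private/report",
]
print(filter_crawlable(ROBOTS_TXT, "ExampleCrawler", urls))  # falls under the * rules
print(filter_crawlable(ROBOTS_TXT, "ExampleBot", urls))      # disallowed everywhere
```

A generic crawler falls back to the `*` group and skips only `/private/`, while `ExampleBot` matches its own group and is blocked from the whole site.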

We also believe that AI search must include economic alignment. This is why we partner with infrastructure and monetization players such as Cloudflare and Tollbit. These partnerships enable compensation mechanisms for rights holders and cover a significant portion of protected websites.

SERP APIs vs. Web Search APIs

SERP APIs extract data from Google’s result pages. They depend on scraping, proxy infrastructure, and continuous adaptation to interface changes. The legal risk flows directly from that dependency.

Web search APIs crawl and index the web independently. They retrieve content directly and return it in a machine-native format. When built correctly, they respect web protocols and avoid Terms of Service violations by design.

This distinction is not cosmetic. It determines long-term reliability, legal exposure, and cost structure.

Why teams are replacing SERP APIs with Linkup

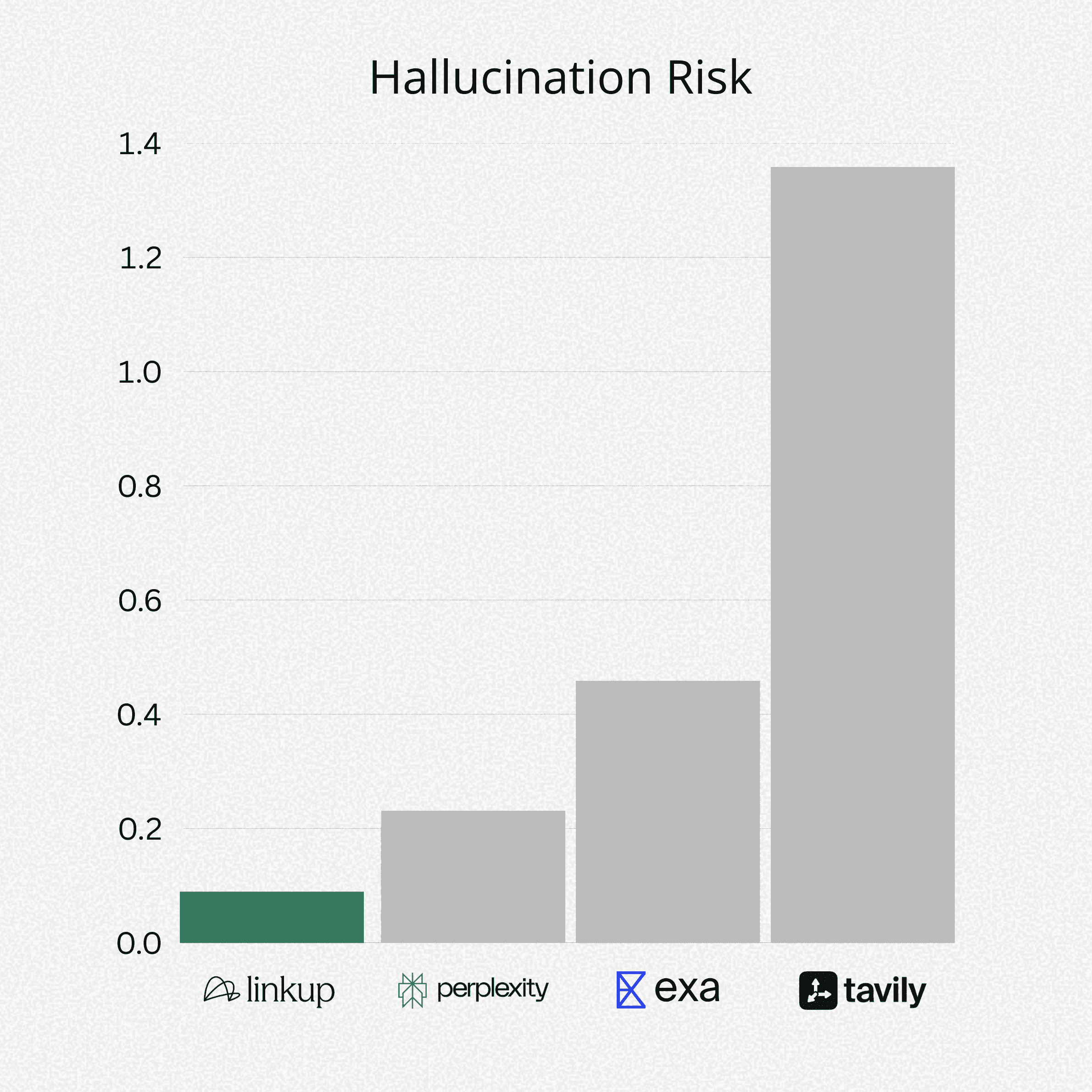

For most use cases, Linkup is a drop-in replacement. The output is structured, and the functional goal is the same: programmatic access to current web information.

Migration typically requires changing endpoints and authentication, and it tends to simplify pipelines rather than force a rethink of product logic. We detailed the value of this alternative in a previous blog post.
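One way to keep a migration close to an endpoint and authentication change is to isolate those details in configuration. The sketch below is hypothetical throughout: `SearchProvider`, `build_search_request`, the example.com endpoints, the `q` request field, and the bearer-token header are placeholders, not Linkup's documented API or any other vendor's. Check the provider's own API reference for the real request schema and auth scheme.

```python
import json
from dataclasses import dataclass
from urllib.request import Request


@dataclass(frozen=True)
class SearchProvider:
    """Provider-specific settings kept out of application code (hypothetical)."""
    endpoint: str
    api_key: str


def build_search_request(provider: SearchProvider, query: str) -> Request:
    """Build (but do not send) a POST request for the given provider.

    The JSON body shape and the Bearer auth header are placeholders;
    real providers define their own schemas.
    """
    body = json.dumps({"q": query}).encode("utf-8")
    return Request(
        provider.endpoint,
        data=body,
        headers={
            "Authorization": f"Bearer {provider.api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Swapping providers is a configuration change; the calling code stays the same.
old_provider = SearchProvider("https://serp.example.com/v1/search", "OLD_KEY")
new_provider = SearchProvider("https://search.example.com/v1/search", "NEW_KEY")

request = build_search_request(new_provider, "latest robots.txt guidance")
print(request.get_method(), request.full_url)
```

Response formats also differ between providers, so in practice a small mapping function usually sits next to this request builder.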

The difference is architectural. Linkup removes the need for scraping, proxies, CAPTCHA solving, and fragile parsing logic. It also removes a growing category of legal and compliance risk.

Stay safe

Google’s lawsuit draws a clear line between two models. One depends on extracting data from search engine interfaces. The other operates its own search infrastructure. Linkup falls into the second category. We do not rely on Google’s results pages, rankings, or interfaces. We operate a proprietary, AI-native web index built through direct crawling and retrieval.

Because of that, the legal, compliance, and operational risks now associated with SERP APIs do not transfer to Linkup users. The system is more predictable, more reliable, and designed for how AI systems actually consume information. The lawsuit did not change our architecture. It made the difference easier to see.